- cross-posted to:

- hackernews@derp.foo

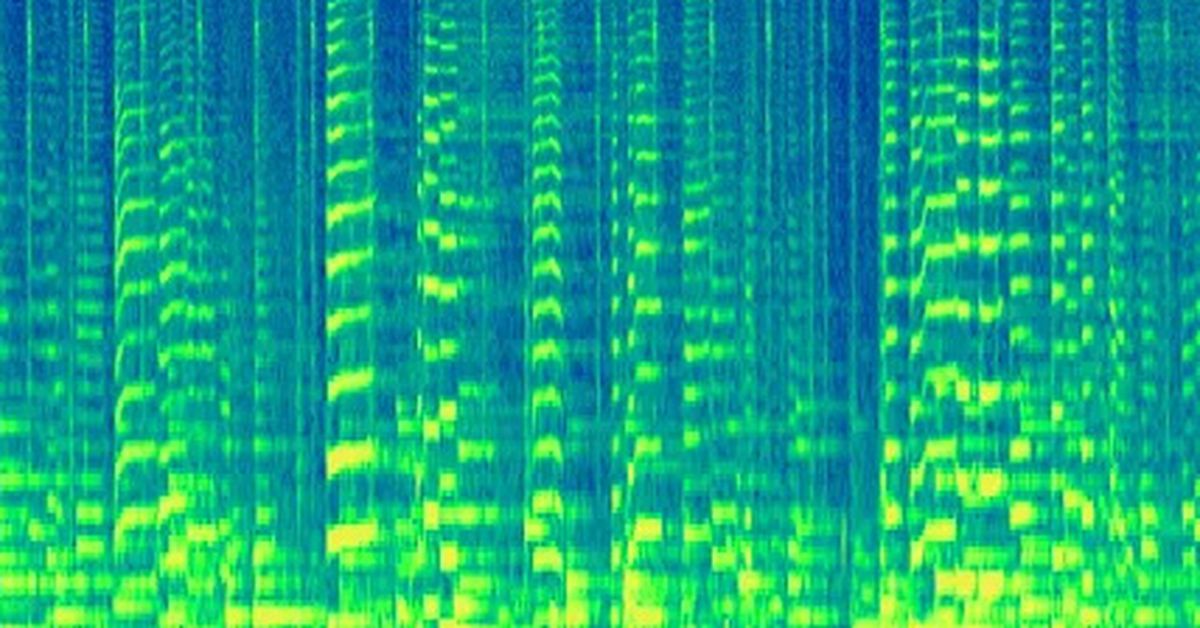

Google is embedding inaudible watermarks right into its AI-generated music

Audio created using Google DeepMind’s AI Lyria model will be watermarked with SynthID to let people identify its AI-generated origins after the fact.

This assumes music is made and enjoyed in a void. It’s entirely reasonable to like music much more if it’s personal to the artist. If an AI writes a song about a very intense and human experience it will never carry the weight of the same song written by a human.

This isn’t like food, where snobs suddenly dislike something as soon as they find out it’s not expensive. Listening to music often has the listener feel a deep connection with the artist, and that connection is entirely void if an algorithm created the entire work in 2 seconds.

That’s a parasocial relationship and it’s not healthy. Sure, Taylor Swift is kinda expressing her emotions from real failed relationships, but you’re not living her life and you never will. Clinging to the fantasy of being her feels good and makes her music feel special to you, but it’s just fantasy.

Personally I think it would be far better if half the music was AI and people had to actually think about whether what they’re listening to sounds good and interesting, rather than being meaningless mush pumped out by an image obsessed Scandinavian metal nerd or a pastiche of borrowed riffs thrown together by a drug frazzled brummie.

Lol, somehow you got the above commenter covering the sentiment that a song is better if its message is true to its creator… something a huge percentage of the population would agree with, and you equate that to fan obsession.

People on the internet are wild.

I don’t understand where they got any of that from, lol. It’s like they learned what a parasocial relationship is earlier today and thought it applied here.

I would kind of agree with this if it wasn’t kind of mean and half of it didn’t come out of nowhere. But then it also seems like what you think you value in your own music taste is whether or not something is new, seeing as your main examples of things that are meaningless or bad are “image obsessed scandinavian metal nerd”, i.e. derivative, and “pastiche of borrowed riffs thrown together by a drug frazzled brummie”, i.e. derivative.

Ha no you are right, I was being a dick - I worked long enough in the music industry that it’s scarred my soul and just thinking of it brings up that bile…

But yeah, I was just being silly with the band descriptions. I was describing some of the music I like in a flippant way to highlight the absurdity of claiming some great artistic value because Ozzy mumbled about Iron Man traveling time for the future of mankind. Dice could come up with more meaningful lyrics; ‘nobody helps him, now he has his revenge’ is the sort of thing an edgy teenage coke head would come up with. And it’s one of my favourite songs of all time. Another example of the greatest songs of all time is Rasputin by Boney M, famously part of a big controversy when people discovered they were a manufactured band, and again the lyrics and music are both brilliant and awful.

People obsess over nonsense all the time. It’s easy to pretend there’s some deep and holy difference between Bach and Offenbach, but the cancan can mean just as much as any toccata if you let it.

Art is in the eye of the beholder, it has always been thus and will always be thus.

I can’t tell if you’re completely missing the point on purpose, or if you actually don’t understand what I mean lol. Who said anything about Taylor Swift?

You can replace her with whatever music you associate with. What I’m getting at is your connection to it isn’t real. It feels real, but that’s because it’s coming from you; you’re putting the meaning in there.

If you could erase all memory of Bach from a classical obsessive’s mind, then play them his greatest hits and say it’s from an AI, they’d say ‘ugly key smashing meaningless drivel’. Maybe they’d admit AI Brahms has some bangers, but without the story behind it and the history of its significance it’s not as magical.

The problem I have is people are too addicted to shortcuts: ‘oh this is Bach, people say he’s great, so this cello suite must be good, therefore I like it’. It’s lazy and dumb. (I use Bach’s cello suite as an example because it’s what’s on the radio, but you can put any bit of music as the example.)

What if an AI writes a song about its own experience? Like how people won’t take its music seriously?

It will depend on whether or not we can empathize with its existence. For now, I think almost all people consider AI to be just large language models and pattern recognition. Not much emotion in that.

That’s because they are just that. Attributing feelings or thought to the LLMs is about as absurd as attributing the same to Microsoft Word. LLMs are computer programs that self optimise to imitate the data they’ve been trained on. I know that ChatGPT is very impressive to the general public and it seems like talking to a computer, but it’s not. The model doesn’t understand what you’re saying, and it doesn’t understand what it is answering. It’s just very good at generating fitting output for given input, because that’s what it has been optimised for.

Glad you’re at least open to the idea.

“I dunno why it’s hard, this anguish–I coddle / Myself too much. My ‘Self’? A large-language-model.”

I noticed some of your comments are disappearing from this thread, is that you or mods?

I’m getting nuked from another thread

Language models don’t experience things, so it literally cannot. In the same way, an equation doesn’t experience the things its variables are intended to represent in the abstract of human understanding.

Calling language models AI is like calling skyscrapers trees. I can sorta get why you could think it makes sense, but it betrays a deep misunderstanding of construction and botany.

What makes your experiences more valid than that of AI?

It is not a measure of validity. It is a lack of capacity.

What is the experience of a chair? Of a cup? A drill? Do you believe motors experience, while they spin?

Language models aren’t actual thought. This isn’t a discussion about if non organic thought is equivalent to organic thought. It’s an equation that uses words and the written rules of syntax instead of numbers. It’s not thinking, it’s a calculator.

The only reason you think a language model can experience is because a marketing man misattributed it the name “AI.” It’s not artificial intelligence. It’s a word equation.

You know how we get all these fun and funny memes where you rephrase a question, and you get a “rule breaking” answer? That’s because it’s an equation, and different inputs avoid parts of the calculation. Thought doesn’t work that way.

I get that the calculator is very good at calculating words. But that’s all it is. A calculator.

What makes your thoughts ‘actual thought’ and not just an algorithm?

Personally, I choose to believe that the people around me are real. In theory, you can’t trust anyone but yourself. I know language models don’t have humanity. I guess that’s the difference.

Yeah man. You can choose to believe whatever you want.

Some food for thought: https://www.youtube.com/watch?v=2L9RZYguI0Q

Oh, that’s easy. I’m not an equation running on a calculator.

You only have thoughts because of electricity.

Do you believe in the existence of a soul or some other god-gene that separates us from machines?

No one said anything about electricity. A calculator can exist on paper, or stones on sticks.

No one said anything about souls. Please don’t make up shit no one said.

I am not an equation. I do not take X input to produce Y output. My thoughts do not require outside stimuli. My thoughts do not give the same output for the same input. I can think, and ambulate and speak, inside a dark room with no stimulus based entirely on my own thoughts.

ChatGPT, and other language models, are equations. They trick you by using random number generation to simulate new outputs for repeated inputs, but if you open the code running the equation and learn how to fix the RNG to a set value, you get the same outputs for each input.
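To make the fixed-seed point concrete, here is a minimal toy sketch in Python. It is not how ChatGPT or any real model is implemented: `TOY_MODEL` and `sample_words` are hypothetical names made up for illustration, and the word weights are invented. It only shows that weighted random sampling repeats exactly once the random number generator is seeded with a fixed value.

```python
import random

# Hypothetical toy "model": for one prompt, a fixed list of candidate next
# words with sampling weights. A real language model computes such weights
# with a neural network; this table is purely illustrative.
TOY_MODEL = {
    "this song is": (["brilliant", "awful", "derivative", "fine"], [4, 3, 2, 1]),
}


def sample_words(prompt, seed=None, count=5):
    """Draw `count` words for `prompt` using weighted random sampling."""
    # random.Random(None) seeds itself from system entropy, so unseeded
    # calls are free to differ; a fixed integer seed makes draws repeatable.
    rng = random.Random(seed)
    words, weights = TOY_MODEL[prompt]
    return rng.choices(words, weights=weights, k=count)


if __name__ == "__main__":
    print(sample_words("this song is", seed=42))
    print(sample_words("this song is", seed=42))  # same list as the line above
    print(sample_words("this song is"))           # unseeded, may differ
```

The two seeded calls print identical lists, while the unseeded call can vary from run to run. That is the behaviour being described: the apparent variety comes from the random draw, not from the weights changing.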

It’s not thought. It’s an equation.

I am not saying non organic thought isn’t possible. I am saying that a salesman pointed at a very very very big calculator and said “it definitely thinks! It’s more than an equation!” And you, along with a lot of news outlets, fell for it.

We do not have machine brains yet. Someone just tried to sell calculators as if they were.

Oddly, I’d find a piece of music written by an AI convinced it was a chair extremely artistic lol. But yeah, just because the algorithm that’s really good at putting words together is trying to convince you it has feelings doesn’t mean it does.