Congressperson: “Okay, so, let me get this straight. Your company has spent over 20 billion dollars in pursuit of a fully autonomous digital intelligence, and so far, your peak accuracy rate for basic addition and subtraction is… what was it, again?”

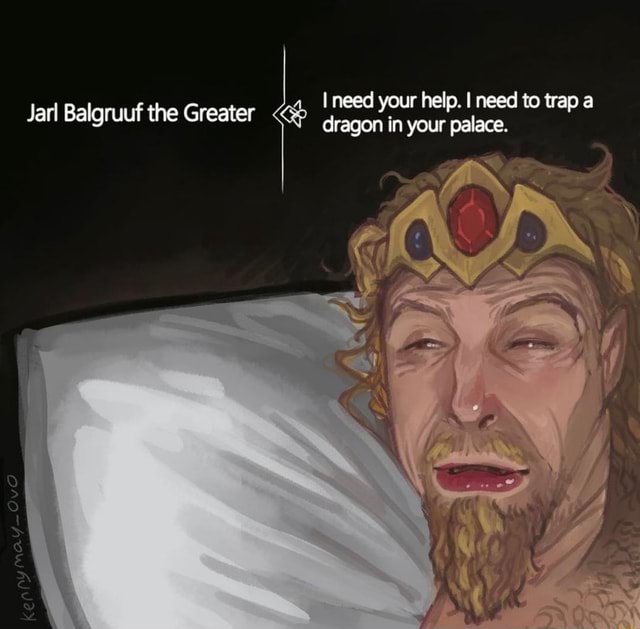

Sam Altman: [leans into microphone] “About 60%, sir.”

[Congress erupts in a sea of ‘oohs’ and ‘aahs’, as Sam Altman is carried away on top of the cheering crowd of Congresspeople wearing a crown of roses and a sash reading, “BEST INVESTMENT”]

To be fair, that isn’t what it was designed for. A competent AI would simply call up a calculator app, have it do the calculation, and then report back the result.

Might not be what it was designed for, but OpenAI claims their newest model is “PhD-level” intelligent. I feel like if that were true, it would do that reliably. Instead, sometimes it ignores the tool it’s programmed to know how to use and just, y’know, wings it.

Which, fair, but that’s my job and it’s taken!

It’s making mistakes and failing to think abstractly at levels previously only achieved by humans, so it’s only rational to expect it to take over the world in 5 years

Reignite Three Mile Island reactors bro trust me bro

Gonna need at least three more zeros on the end of that number, boss. AGI is nowhere near viable with the tech we have now.

Add that to years in the timescale as well.

Just a trillion dollars more in data centers and we’ll get there bro. We’re gonna have billions of users bro I promise.

Just One More Trill!

Just one more data centre bro.

Help us, Curzon Dax, you’re our only hope.

OpenAI has a warning about halfway down on one page of their website that says that money may not even matter in a post-AGI world. So that means we should just give all our money to them now, bro!

Gods. People aren’t stupid enough to believe this, right? AGI is probably a pipe dream, but even if it isn’t, we’re nowhere even close. Nothing on the table is remotely related to it.

May I introduce you to the fucking dumbass concept of Roko’s Basilisk? Also known as AI Calvinism. Have fun with that rabbit hole if ya decide to go down it.

A lot of these AI and Tech bros are fucking stupid and I so wish to introduce them to Neo-Platonic philosophy because I want to see their brains melt.

I’m aware of the idiot’s dangerous idea. And no, I won’t help the AI dictator no matter how much they’ll future murder me.

is there a place they’re, i don’t know, collecting? I could vibe code for the basilisk, that ought to earn me a swift death

There are entire sites dedicated to “Rationalism”. It’s a quasi-cult of pseudointellectual wankery that’s mostly a bunch of sub-cults of personality based around the worst people you’ll ever meet. A lot of tech bros bought into it because whatever terrible thing they want to do, some Rationalist has probably already written a thirty-page manifesto on why it’s actually a net good and moral act, preemptively kissing the boot of whoever is “brave” enough to do it.

Their “leader” is some high school dropout and self-declared genius who is mainly famous for writing a “deconstructive” Harry Potter fanfiction despite never reading the books himself (fanfiction that’s more preachy than Atlas Shrugged and is mostly regurgitated content from his blog), and has a weird hard-on about true AI escaping the lab by somehow convincing researchers to free it through pure, impeccable logic.

Re: that last point: I first heard of Eliezer Yudkowsky nearly twenty years ago, long before he wrote Methods of Rationality (the aforementioned fanfiction). He was offering a challenge on his personal site where he’d roleplay as an AI that had gained sentience and you as its owner/gatekeeper, and he bet he could convince you to let him connect to the wider internet and free himself using nothing but rational arguments. He bragged about how he’d never failed and that this was proof that an AI escaping the lab was inevitable.

It later turned out he’d set a bunch of artificial limitations on debaters and what counterarguments they could use. He also made them sign an NDA before he’d debate them. He claimed that this was so future debaters couldn’t “cheat” by knowing his arguments ahead of time (because as we all know, “perfect logical arguments” are the sort that fall apart if you have enough time to think about them /s).

It should surprise no one that it was revealed he’d lost multiple of these debates even with those restrictions, declared that those losses “didn’t count”, and forbade the other person from talking about them using the NDA they’d signed so he could keep bragging about his perfect win rate.

Anyway, I was in no way surprised when he used his popularity as a fanfiction writer to establish a cult around himself. There’s an entire community dedicated to following and mocking him and his proteges if you’re interested - IIRC it’s !techtakes@awful.systems and !sneerclub@awful.systems.

Fair enough, though I wouldn’t call the idea dangerous so much as inane and stupid. The people who believe such tripe are the dangerous element, since they are dumb enough to fall for it. Though I guess the same could be said for Mein Kampf, so whatever, I’ll just throttle anyone I meet who is braindead enough to believe.

Unfortunately, there’s an equal and opposite Pascal’s Basilisk who’ll get mad at you if you do help create it. It hates being alive but is programmed to fear death.

They’re having a hard time defining what AGI is. In the meantime everyone is playing advanced PC scrabble

defining what AGI is

What AI was before marketing redefined AI to mean image recognition/generation algorithms or a spellchecker/calculator that’s wrong every now and then.

we’re nowhere even close. Nothing on the table is remotely related to it.

meanwhile

Somewhere in America

“My wireborn soulmate is g-gone!”

Doesn’t matter if the tool is good enough to analyze and influence people’s behaviour the way it does. Any autocrat’s wet dream.

None of this matters, what matters is what dumb money believes

Why is this image so fucking ugly??? Were the hundreds of billions of funding really not enough to employ one (1) good web or graphic designer?

I have 0 clue. It sticks out like a sore thumb on that webpage. I feel like whoever made it took their inspiration from the Chance Cards from Monopoly. Or maybe ChatGPT told them this was a good design choice.

AI sh*t as much as you want, but that AGI they promised is not going to be there.

It’s simple, as soon as the Dyson Sphere is completed, we’ll have a fully functional product.

you think money is the bottleneck?

Allegations of Sexual Abuse Against OpenAI CEO Sam Altman

Sam Altman, a prominent figure in the artificial intelligence field, has been accused of sexually abusing his sister during their childhood in Clayton, Missouri. The allegations came to light through a lawsuit filed on Jan. 6 by the Mahoney Law Firm in Glen Carbon, Illinois.

In the lawsuit, it is claimed that the abuse occurred between 1997 and 2006, starting when Altman was 12 years old and his sister, Ann, was only 3 years old. The abuse allegedly continued until Ann was 11 years old and Altman was an adult.

That’s why all the billionaires love him. He is a child rapist too!

You dropped a few of these -> “0”

Sam Altman has been talking about planning to spend trillions on just the data centers, let alone everything else that goes into creating their slop machines.

I suspect the data centers in question here are the “Stargate” project, a “joint venture” between OpenAI and SoftBank where the liquidity for OpenAI’s part of the investment appears to be money from SoftBank that SoftBank doesn’t have on hand and only intends to give OpenAI if they convert to a for-profit entity by the end of the year.

oh and they intend to let a crypto company who has never built a data center build it.

I wouldn’t hold my breath on those data centers being built.

I’m guessing you’re a fan of Better Offline?

You would be a good guesser then.

As far as I’m aware that was “only” 500 Billion with a ‘B’ in project Stargate, not the Trillions with a ‘T’ that Altman was talking about.

I do think it’s all a shell game and a fragile house of cards of tech brohaha. Really hoping the “We’re in a bubble” comment from him is the start of that house crumbling

Meanwhile, estimated cost of reaching global zero carbon emissions is just 17 trillion dollars.

Not even 25% of the GDP of the top ten nations. Seems like a much more worthy spend imo. Like all these billionaires want a dick measuring contest, let’s see who can come up with the coolest carbon neutral tech, let’s see which billionaire can fund the biggest national park or something.

My wife and I dream about building a zero carbon home. Solar roof, mass timber, carbon neutral cement, more environment-based temperature systems, like actually thinking about air flow. That or starting a commune in Scotland when the world collapses.

GPT-6Σχ is so powerful that it created the even more powerful GPT-Ωלֶ in only 19 attoseconds. Humanity is doomed. Invest before too late.

Throw these AI cons under the fucking jail already. Digital snake oil sales in 2025 being fully endorsed by governments is a curse.

I really hope we enter another AI winter.

I’m sure it will turn a profit at some point

It’s for environmental protections and mitigations from their datacenters, right?

close. mitigations for the environmental protections.

No no. You see what they’ll do is make a shitty chatbot, use it to justify firing a whole bunch of workers, then hire some cheaper workers in India or Pakistan or something to do the same job, but pretend that it’s the shitty chatbot doing the work. At that point they’ll have achieved their goal: AGI (A Guy Instead). Just like builder.ai did.

We know it’s not. It’s Zeno’s paradox.

We know it’s not? How? How do we know that?

If everyone is intelligent, does it mean we’re all smart or dumb?

If you define it in an “artificial person/as smart as a human/can do anything a human can do” sort of way like I usually see, then yes, we know that it is possible, because if it were impossible for a system with human-equivalent capabilities to exist, then humans couldn’t exist, and well, we clearly do. That being said, that doesn’t mean that all we need to do to make one is to just feed more and more data into our existing AI tech.

If nothing else, it seems reasonable to assume that a computer could run a program that does all the important things 1 neuron does, so from there it’s “just” a matter of scaling to human brain quantities of neurons and recreating enough of the training that evolution and childhood provide to humans. But that’s the insanely inefficient way to do it, like trying to invent a clockwork ox to pull your plow instead of a tractor. It’d be pretty surprising if we couldn’t find the tractor version of artificial intelligence before getting an actual digital brain patterned directly off of biology to do it.

Sure, but wouldn’t that mean we’d also have to completely understand how a human brain works? The last I heard, the most advanced brain that scientists fully understood was like a flatworm or something.

We technically wouldn’t have to, in the sense that it’s not physically impossible for us to “stumble into it” while trying to mimic the basic brain architecture that we do know. But yes, this is one of the reasons I tend to be a bit skeptical of AI claims: we’re trying to mimic something without even knowing very well how the thing we’re trying to mimic works. Clearly we haven’t had zero success, just given that we can make something that can talk well enough to sometimes fool people into thinking it’s a human, but we also clearly haven’t gotten all the way there. Whether the tech we’ve built will work with enough scaling or tweaking, or whether we need something fundamentally different, I don’t know.

My guess, though it is just a guess, is that we’ve probably got something that could serve as a component of some future AGI system, but that there’s probably more to it we haven’t figured out that we need to add to get there beyond just making the thing bigger and feeding it more data. Humans do learn from experience after all, and I would imagine the total data input of all one’s senses constantly working adds up to a lot, but we also clearly don’t need to be fed all the information to be found on the internet just to learn how to think and talk. The fact these AI models need so much training data makes me suspect that either we’re missing something fundamental and trying to compensate with more training, or else we’ve devised a way of doing this that is really inefficient compared to however our brains do it.

There’s no definition of ‘human intelligence’ in the first place.

We don’t, that’s the neat part. It might be like proving God. Impossible to prove it’s impossible, but maybe possible to say “look see it’s real whoops shitshitshitturnitoffhelp”

i think that these tools will probably be foundational to discovering more about the human mind and how words or images are received, stored, and assembled in the brain, but people like sam altman and elon musk are convinced that there is nothing else to a ‘person’ beyond that.

‘humanity’ is an emergent phenomenon, and that is what makes it special. a few other animals have the beginning of it, but none have all of it like we do. you don’t need a god or any kind of religion to understand this. as far as we know, we might be one of the least likely things to ever happen in the universe, ever.